# AI usage

# Setting up Ollama

Ollama lets you run AI models (neural networks) locally on your computer or use cloud-hosted versions of them.

Download the Ollama app.

Open the app and select a model. The full list of available models is published on the Ollama website.

Models can be local or cloud-based. Local models run entirely on your computer, but larger ones need a powerful machine with plenty of memory. Cloud models run on Ollama's servers instead.

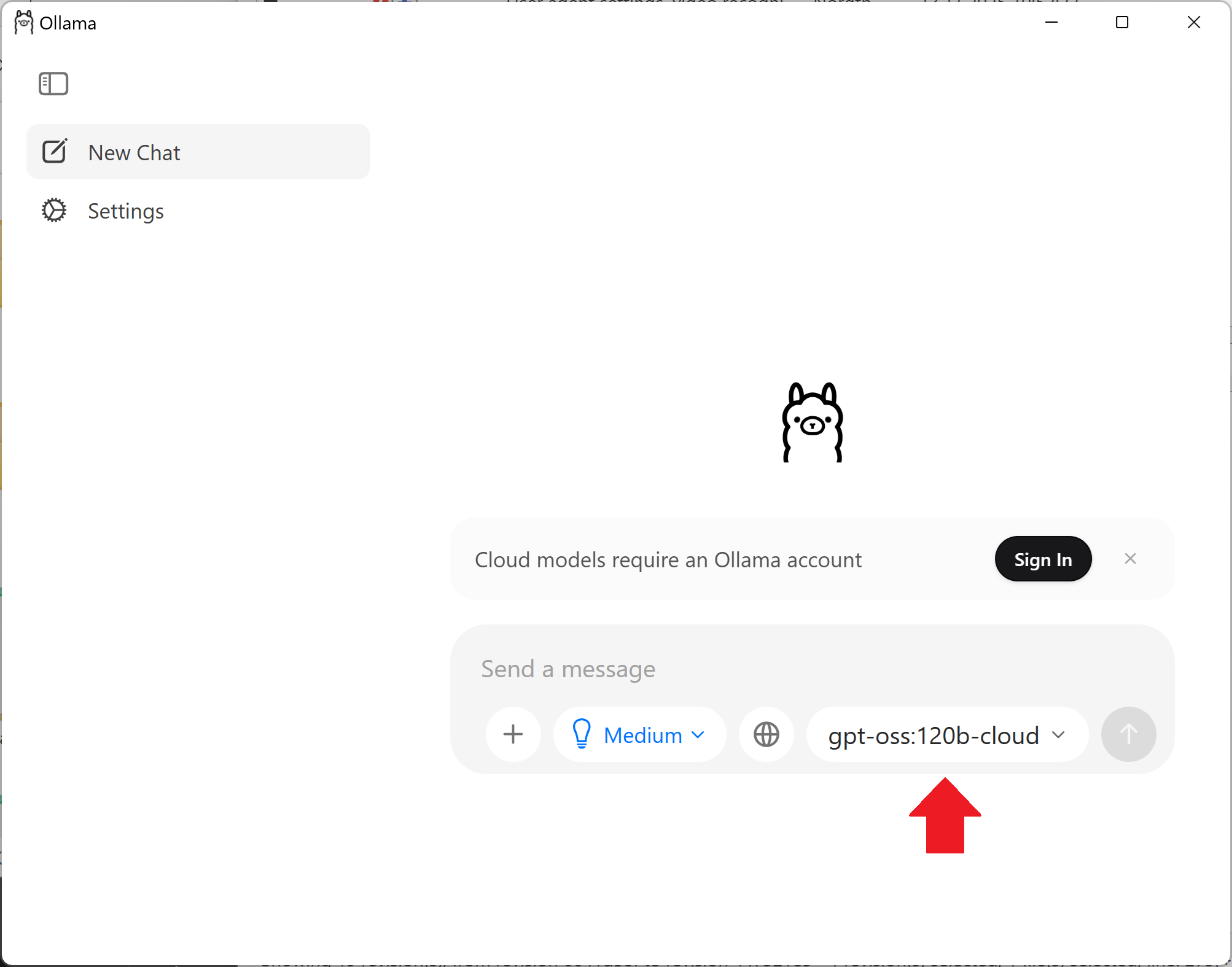

For a quick start, you can select the cloud model `gpt-oss:120b-cloud` or the local model `granite4:micro`.

If you selected the cloud model, Ollama will ask you to log in.

Send a test message in the Ollama chat to make sure the model is working.

If you selected a local model, Ollama will download it before first use. For larger models this can take a long time.

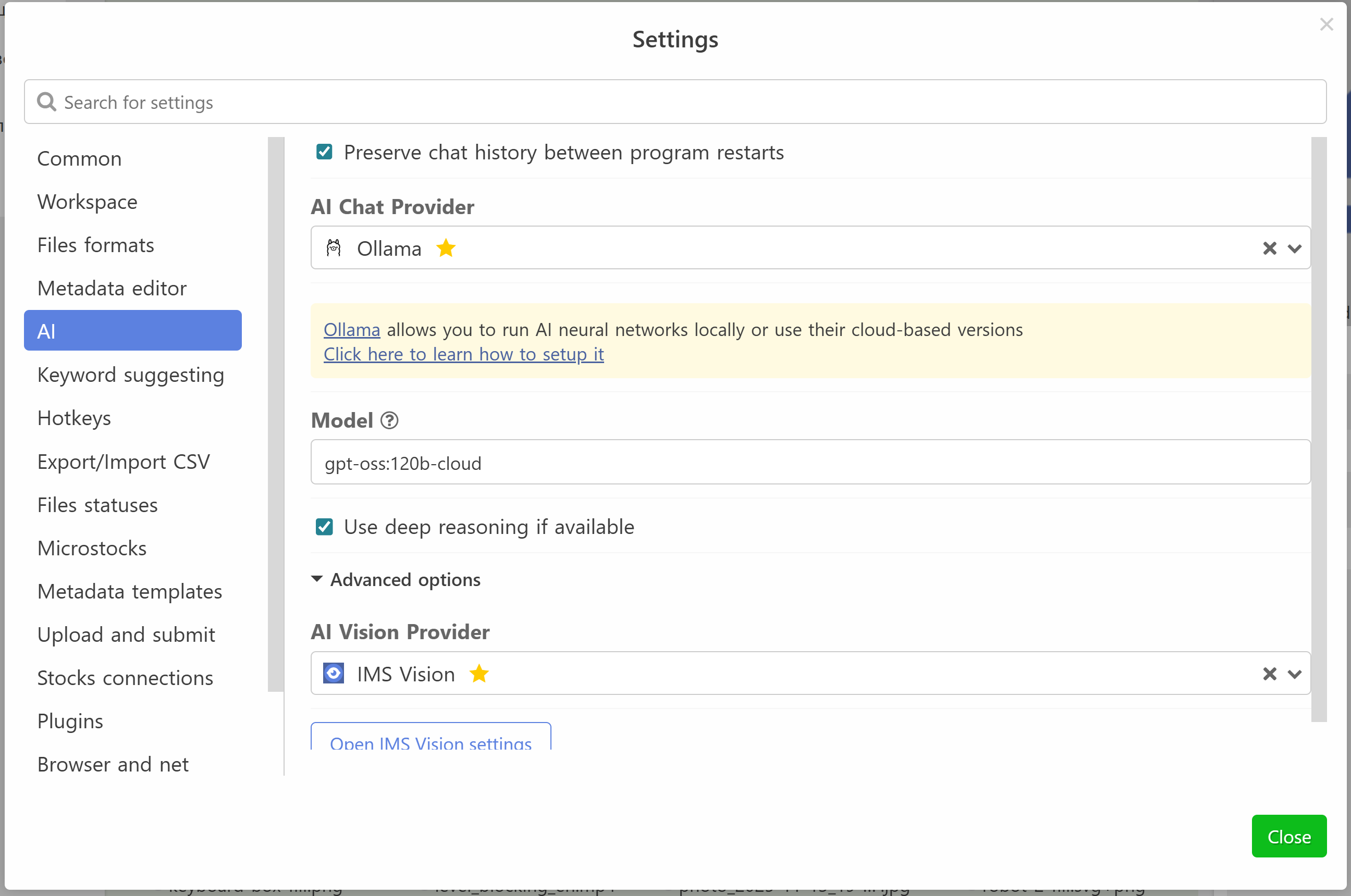
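If you prefer to check the model without the chat window, you can also send a test message through Ollama's local REST API, which listens on `http://localhost:11434` by default. The sketch below is a minimal example using only the Python standard library; it assumes the Ollama app is running on the same computer and uses the `/api/chat` endpoint with streaming turned off. The function names `build_chat_request` and `send_test_message` are hypothetical helpers, not part of Ollama.

```python
import json
import urllib.request

# Default address of a locally running Ollama instance (assumption: default port, same machine).
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(model: str, message: str) -> dict:
    """Build the JSON body for Ollama's /api/chat endpoint (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": message}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }


def send_test_message(model: str, message: str = "Hello!") -> str:
    """Send one chat message to Ollama and return the model's reply text (hypothetical helper)."""
    body = json.dumps(build_chat_request(model, message)).encode("utf-8")
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # A generous timeout, because a local model may still be loading on first use.
    with urllib.request.urlopen(request, timeout=300) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

For example, `send_test_message("granite4:micro")` should return the model's answer as a string once the model is available. If the call fails with a connection error, the Ollama app is most likely not running.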

After Ollama responds to the test message, switch back to IMS AI Studio and enter the name of the selected model.
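The model name has to match exactly, including the tag after the colon (for example `granite4:micro`). As a minimal sketch, assuming Ollama is running locally on its default port, you can print the names Ollama reports through its `/api/tags` endpoint and copy the one you need. The helpers `parse_model_names` and `list_models` are hypothetical, and depending on your Ollama version the list may not include cloud models you have not used yet.

```python
import json
import urllib.request

# Default address of a locally running Ollama instance (assumption: default port, same machine).
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags: dict) -> list[str]:
    """Extract model names from a /api/tags response (hypothetical helper)."""
    return [entry["name"] for entry in tags.get("models", [])]


def list_models() -> list[str]:
    """Ask the local Ollama instance which models it knows about (hypothetical helper)."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=10) as response:
        return parse_model_names(json.load(response))
```

Calling `list_models()` returns a list of strings such as `"granite4:micro"`; any of them can be entered as the model name.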

That's it, the AI chat is ready to use!